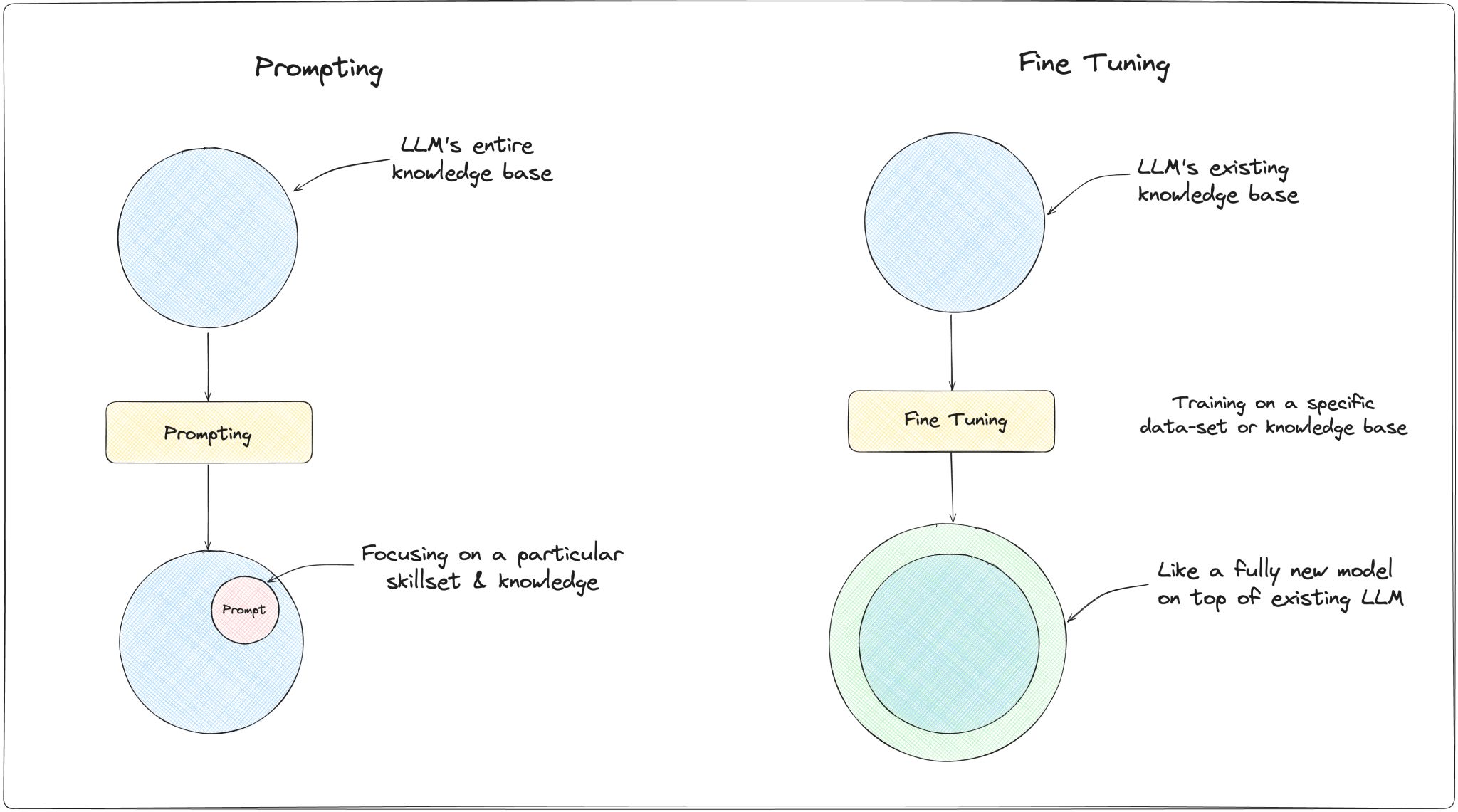

Prompting or Fine-tuning LLMs?

When to use prompting vs fine-tuning for your AI product.

A while ago, I was having a conversation with a founder who was looking to add AI to his web-based digital marketing app.

His concern was that a well-written prompt to an existing LLM could already do what they were building, so he was thinking of creating their own model.

Realising that their product was still relatively new, and that building a model from scratch would only cost them more time and money, I recommended fine-tuning an existing LLM instead.

What is fine-tuning LLMs?

In simple terms, fine-tuning means further training an existing LLM on your own custom data. The result is a new, custom version of the model that is specialised for your data and use case.

There are tools like Hugging Face which help make this easier, and a few months ago OpenAI released a fine-tuning API too. Just upload your training data (example conversations showing the inputs and outputs you want), and a fine-tuned custom GPT model will be yours to use!
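To make the prompting vs fine-tuning distinction concrete, here is a minimal Python sketch (standard library only) that uses the same examples both ways. First, they are packed into every request as a few-shot prompt. Second, they are written once as chat-format JSONL training data, one `{"messages": [...]}` object per line, which is the shape OpenAI's fine-tuning guide describes for chat models. The example pairs, the system prompt, and the helper names `few_shot_messages` and `fine_tuning_jsonl` are hypothetical illustrations, not part of any library.

```python
import json

# Hypothetical examples for a digital marketing app: (user request, ideal reply).
# These pairs are illustrative placeholders, not real training data.
EXAMPLES = [
    ("Write a tagline for an eco-friendly water bottle.",
     "Sip sustainably. Refill endlessly."),
    ("Write a tagline for a budget travel app.",
     "See more of the world for less."),
]

# Hypothetical system prompt shared by both approaches.
SYSTEM = "You are a copywriter for a digital marketing app."


def few_shot_messages(examples, new_request):
    """Prompting: resend the examples as prior turns with every request."""
    messages = [{"role": "system", "content": SYSTEM}]
    for user, assistant in examples:
        messages.append({"role": "user", "content": user})
        messages.append({"role": "assistant", "content": assistant})
    messages.append({"role": "user", "content": new_request})
    return messages


def fine_tuning_jsonl(examples):
    """Fine-tuning: one chat-format JSON object per line, one line per example."""
    lines = []
    for user, assistant in examples:
        record = {"messages": [
            {"role": "system", "content": SYSTEM},
            {"role": "user", "content": user},
            {"role": "assistant", "content": assistant},
        ]}
        lines.append(json.dumps(record))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    prompt = few_shot_messages(EXAMPLES, "Write a tagline for a vegan bakery.")
    # system message + 2 example pairs + the new request = 6 messages
    print(len(prompt))
    print(fine_tuning_jsonl(EXAMPLES), end="")
```

Uploading the JSONL file and starting a job then goes through the OpenAI SDK (in the current Python SDK, `client.files.create(..., purpose="fine-tune")` followed by `client.fine_tuning.jobs.create(...)`); check the official docs for supported base models and minimum example counts before relying on this. The trade-off shows up in the first helper: with prompting, the examples are resent, and paid for, on every request, whereas fine-tuning bakes them into the model once.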

Recommendation for Startups

If you are a startup looking to integrate AI into your product, you should look at fine-tuning as a very strong option to move fast and validate your idea.

You can always come back to creating your own model once you reach product-market fit (PMF) and are in a better position. I covered this in more detail in an earlier post: When to re-invent the wheel?